Microsoft DP-300 Exam Questions

Questions for the DP-300 were updated on: Apr 18, 2025


Question 1 Topic 1, Case Study 1

HOTSPOT

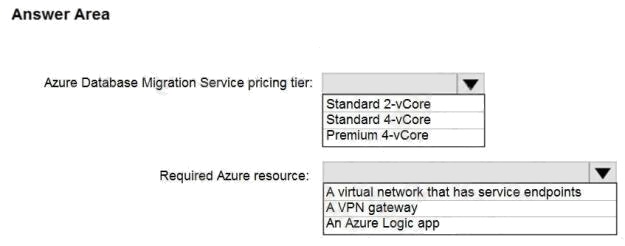

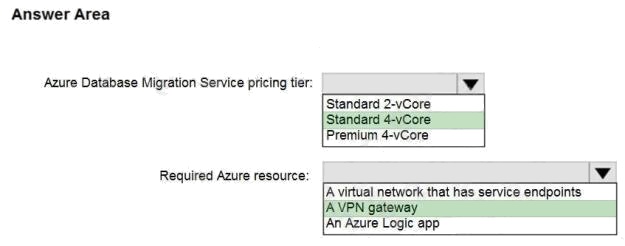

You are planning the migration of the SERVER1 databases. The solution must meet the business requirements.

What should you include in the migration plan? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Explanation:

Azure Database Migration Service

Box 1: Premium 4-vCore

Scenario: Migrate the SERVER1 databases to the Azure SQL Database platform. Minimize downtime during the migration of the SERVER1 databases.

The Premium 4-vCore tier is for large or business-critical workloads. It supports online migrations, offline migrations, and faster migration speeds.

Incorrect Answers:

The Standard pricing tier suits most small- to medium- business workloads, but it supports offline migration only.

Box 2: A VPN gateway

You need to create a Microsoft Azure Virtual Network for the Azure Database Migration Service by using the Azure Resource Manager deployment model, which provides site-to-site connectivity to your on-premises source servers by using either ExpressRoute or VPN.

Reference: https://azure.microsoft.com/pricing/details/database-migration/ https://docs.microsoft.com/en-us/azure/dms/tutorial-sql-server-azure-sql-online

Plan and Implement Data Platform Resources

Question 2 Topic 1, Case Study 1

HOTSPOT

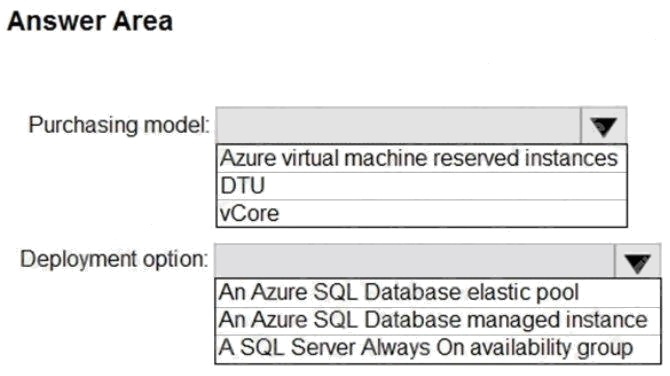

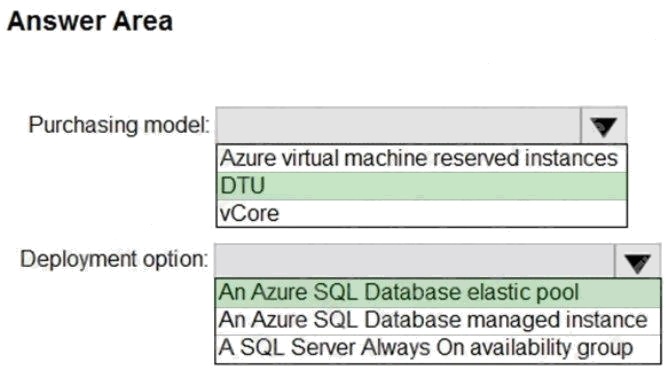

You need to recommend the appropriate purchasing model and deployment option for the 30 new databases. The solution must meet the technical requirements and the business requirements.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Explanation:

Box 1: DTU

Scenario:

The 30 new databases must scale automatically.

Once all requirements are met, minimize costs whenever possible.

You can configure resources for the pool based on either the DTU-based purchasing model or the vCore-based purchasing model.

In short, the DTU model has the advantage of simplicity. Also, if you're just getting started with Azure SQL Database, the DTU model offers more options at the lower end of performance, so you can get started at a lower price point than with vCore.

Box 2: An Azure SQL database elastic pool

Azure SQL Database elastic pools are a simple, cost-effective solution for managing and scaling multiple databases that have varying and unpredictable usage demands. The databases in an elastic pool are on a single server and share a set number of resources at a set price. Elastic pools in Azure SQL Database enable SaaS developers to optimize the price performance for a group of databases within a prescribed budget while delivering performance elasticity for each database.

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/elastic-pool-overview https://docs.microsoft.com/en-us/azure/azure-sql/database/reserved-capacity-overview

Plan and Implement Data Platform Resources

Question 3 Topic 2, Case Study 2

You need to design a data retention solution for the Twitter feed data records. The solution must meet the customer sentiment analytics requirements.

Which Azure Storage functionality should you include in the solution?

- A. time-based retention

- B. change feed

- C. lifecycle management

- D. soft delete

Answer:

C

Explanation:

The lifecycle management policy lets you:

Delete blobs, blob versions, and blob snapshots at the end of their lifecycles

Scenario:

Purge Twitter feed data records that are older than two years.

Store Twitter feeds in Azure Storage by using Event Hubs Capture. The feeds will be converted into Parquet files.

Minimize administrative effort to maintain the Twitter feed data records.
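The purge requirement in the scenario can be expressed as a lifecycle management rule. The sketch below is only an illustration: it builds a policy document in the JSON shape that lifecycle management uses, with a single delete action on block blobs. The rule name, the `twitter-capture/` prefix, and the choice of 730 days as "two years" are assumptions made for this example, not values from the case study.

```python
import json

# "Older than two years" is approximated as 730 days (assumption: leap days ignored).
RETENTION_DAYS = 2 * 365


def build_purge_policy(prefix: str, days: int = RETENTION_DAYS) -> dict:
    """Return a lifecycle policy that deletes block blobs under `prefix`
    once they were last modified more than `days` days ago."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": "purge-old-twitter-feeds",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        "prefixMatch": [prefix],
                    },
                    "actions": {
                        "baseBlob": {
                            "delete": {"daysAfterModificationGreaterThan": days}
                        }
                    },
                },
            }
        ]
    }


# Hypothetical container/path prefix for the Event Hubs Capture output.
policy = build_purge_policy("twitter-capture/")
print(json.dumps(policy, indent=2))
```

Once a rule like this is applied to the storage account, the platform evaluates it on its own schedule, which is what keeps the administrative effort low compared with a custom cleanup job.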

Incorrect Answers:

A: Time-based retention policy support: Users can set policies to store data for a specified interval. When a time-based retention policy is set, blobs can be created and read, but not modified or deleted. After the retention period has expired, blobs can be deleted but not overwritten.

Reference: https://docs.microsoft.com/en-us/azure/storage/blobs/storage-lifecycle-management-concepts

Question 4 Topic 2, Case Study 2

You need to implement the surrogate key for the retail store table. The solution must meet the sales transaction dataset requirements.

What should you create?

- A. a table that has a FOREIGN KEY constraint

- B. a table that has an IDENTITY property

- C. a user-defined SEQUENCE object

- D. a system-versioned temporal table

Answer:

B

Explanation:

Scenario: Contoso requirements for the sales transaction dataset include:

Implement a surrogate key to account for changes to the retail store addresses.

A surrogate key on a table is a column with a unique identifier for each row. The key is not generated from the table data.

Data modelers like to create surrogate keys on their tables when they design data warehouse models. You can use the IDENTITY property to achieve this goal simply and effectively without affecting load performance.
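To make the idea concrete, here is a toy Python model of a table whose key column behaves like an IDENTITY column. It is a sketch, not how the database engine works: the class and field names are invented for illustration, and the counter hands out contiguous values, whereas a dedicated SQL pool only guarantees that IDENTITY values are unique.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class StoreRow:
    store_key: int  # surrogate key, generated by the table rather than taken from the data
    store_id: str   # business key from the source system (hypothetical format)
    address: str


class RetailStoreTable:
    """Toy stand-in for a table with an IDENTITY-like key column."""

    def __init__(self, seed: int = 1, increment: int = 1):
        self._next_key = count(seed, increment)
        self.rows: list[StoreRow] = []

    def insert(self, store_id: str, address: str) -> StoreRow:
        # The caller never supplies store_key; the table assigns it.
        row = StoreRow(next(self._next_key), store_id, address)
        self.rows.append(row)
        return row


table = RetailStoreTable()
first = table.insert("S-100", "1 Main St")
moved = table.insert("S-100", "22 Harbor Rd")  # same store, new address

for row in table.rows:
    print(row)
```

Both rows share the business key `S-100` but carry different surrogate keys, so sales facts recorded against the old address stay linked to the old row after the store moves.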

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-identity

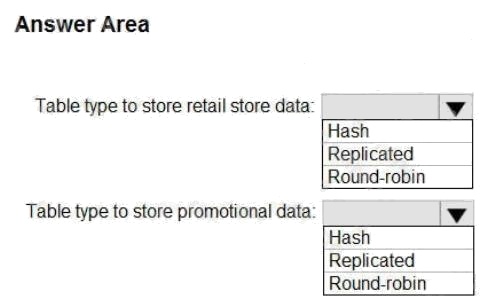

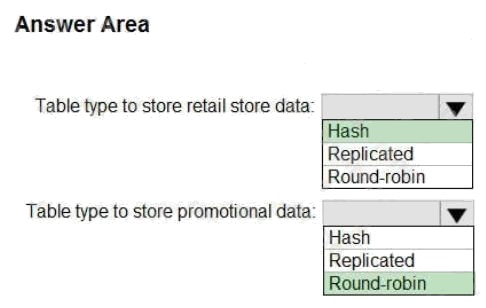

Question 5 Topic 2, Case Study 2

HOTSPOT

You need to design an analytical storage solution for the transactional data. The solution must meet the sales transaction dataset requirements.

What should you include in the solution? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Explanation:

Box 1: Hash

Scenario:

Ensure that queries joining and filtering sales transaction records based on product ID complete as quickly as possible.

A hash-distributed table can deliver the highest query performance for joins and aggregations on large tables.

Box 2: Round-robin

Scenario:

You plan to create a promotional table that will contain a promotion ID. The promotion ID will be associated to a specific product. The product will be identified by a product ID. The table will be approximately 5 GB.

A round-robin table is the most straightforward table to create and delivers fast performance when used as a staging table for loads. These are some scenarios where you should choose round-robin distribution:

When you cannot identify a single key to distribute your data.

If your data doesn't frequently join with data from other tables.

When there are no obvious keys to join.
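The difference between the two distribution styles can be illustrated with a small simulation. This is a sketch under stated assumptions: `zlib.crc32` stands in for the service's internal hash function, which is not documented here, and the sample rows are invented. The count of 60 distributions matches a dedicated SQL pool.

```python
import zlib
from collections import defaultdict
from itertools import cycle

DISTRIBUTIONS = 60  # a dedicated SQL pool spreads each table over 60 distributions


def hash_distribute(rows, key):
    """Place each row by hashing its key, so equal keys share a distribution."""
    placement = defaultdict(list)
    for row in rows:
        # crc32 is only a deterministic stand-in for the real hash function.
        bucket = zlib.crc32(str(row[key]).encode()) % DISTRIBUTIONS
        placement[bucket].append(row)
    return placement


def round_robin_distribute(rows):
    """Deal rows out to distributions in turn, ignoring their contents."""
    placement = defaultdict(list)
    for bucket, row in zip(cycle(range(DISTRIBUTIONS)), rows):
        placement[bucket].append(row)
    return placement


def buckets_for(placement, product_id):
    """Return the set of distributions holding any row for `product_id`."""
    return {
        bucket
        for bucket, rows in placement.items()
        if any(r["product_id"] == product_id for r in rows)
    }


# Invented sample data: three sales rows for each of three products.
sales = [{"product_id": pid, "qty": q} for q in range(1, 4) for pid in (101, 202, 303)]

hashed = hash_distribute(sales, "product_id")
spread = round_robin_distribute(sales)

print("hash:", len(buckets_for(hashed, 101)), "distribution(s) hold product 101")
print("round-robin:", len(buckets_for(spread, 101)), "distribution(s) hold product 101")
```

With hash distribution every row for a given product ID sits in one distribution, so a join or filter on product ID needs no data movement. With round-robin the same product's rows are scattered, which is acceptable for staging loads but costly for joins.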

Incorrect Answers:

Replicated: Replicated tables eliminate the need to transfer data across compute nodes by replicating a full copy of the data of the specified table to each compute node. The best candidates for replicated tables are tables with sizes less than 2 GB compressed and small dimension tables.

Reference: https://rajanieshkaushikk.com/2020/09/09/how-to-choose-right-data-distribution-strategy-for-azuresynapse/

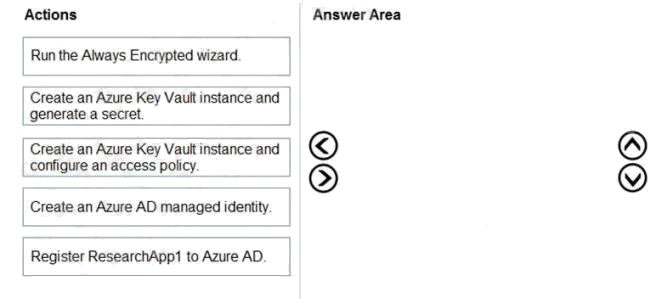

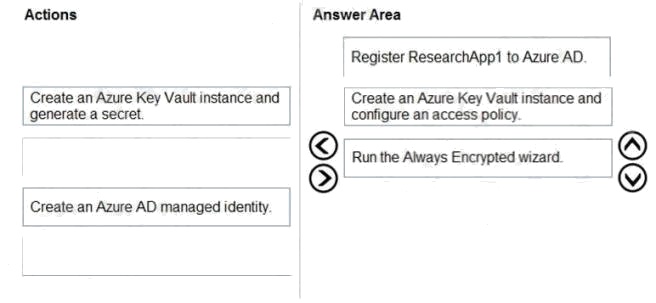

Question 6 Topic 3, Case Study 3

DRAG DROP

You create all of the tables and views for ResearchDB1.

You need to implement security for ResearchDB1. The solution must meet the security and compliance requirements.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Answer:

Explanation:

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/always-encrypted-azure-key-vault-configure?tabs=azure-powershell

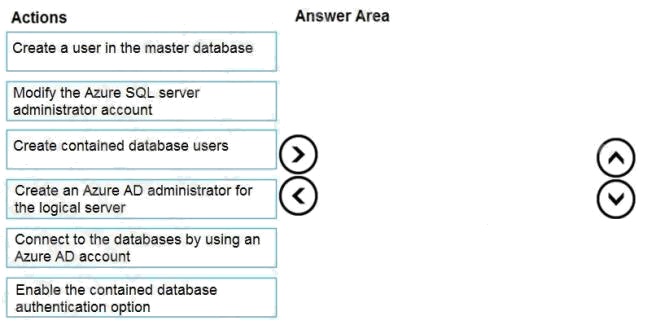

Question 7 Topic 3, Case Study 3

DRAG DROP

You need to configure user authentication for the SERVER1 databases. The solution must meet the security and compliance requirements.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

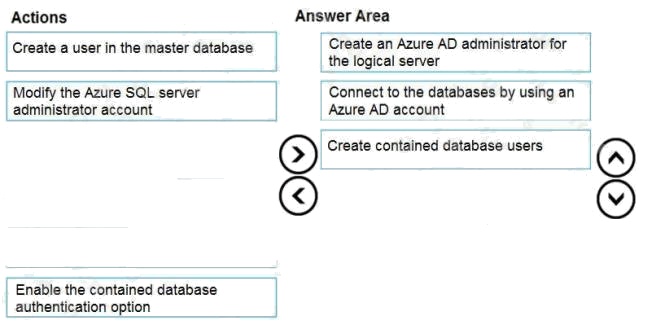

Answer:

Explanation:

Scenario: Authenticate database users by using Active Directory credentials.

The configuration steps include the following procedures to configure and use Azure Active Directory authentication.

1. Create and populate Azure AD.

2. Optional: Associate or change the active directory that is currently associated with your Azure Subscription.

3. Create an Azure Active Directory administrator. (Step 1)

4. Connect to the databases using an Azure AD account (the Administrator account that was configured in the previous step). (Step 2)

5. Create contained database users in your database mapped to Azure AD identities. (Step 3)
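The last step in the list is performed with a T-SQL `CREATE USER ... FROM EXTERNAL PROVIDER` statement, run while connected as the Azure AD administrator. The helper below is a minimal sketch that only builds the statement text; the function names and the example principal `dba1@contoso.com` are hypothetical, and executing the statement against a database is left out.

```python
def quote_identifier(name: str) -> str:
    """Bracket-quote a T-SQL identifier, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def create_contained_user_sql(principal: str) -> str:
    """Build the statement that maps an Azure AD identity to a contained database user."""
    return f"CREATE USER {quote_identifier(principal)} FROM EXTERNAL PROVIDER;"


# Hypothetical Azure AD user principal name.
statement = create_contained_user_sql("dba1@contoso.com")
print(statement)
```

Quoting the principal name matters because user principal names contain characters such as `@` that are not valid in an unquoted identifier.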

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/authentication-aad-configure?tabs=azure-powershell

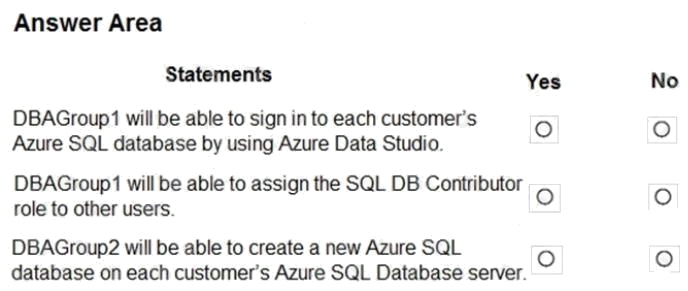

Question 8 Topic 4, Case Study 4

HOTSPOT

You are evaluating the role assignments.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

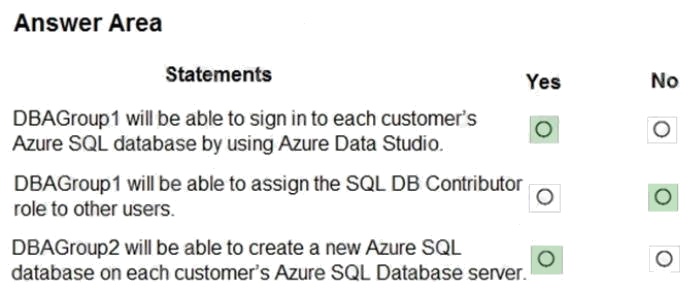

Answer:

Explanation:

Box 1: Yes

DBAGroup1 is a member of the Contributor role.

The Contributor role grants full access to manage all resources, but does not allow you to assign roles in Azure RBAC, manage assignments in Azure Blueprints, or share image galleries.

Box 2: No

Box 3: Yes

DBAGroup2 is a member of the SQL DB Contributor role.

The SQL DB Contributor role lets you manage SQL databases, but not access to them. Also, you can't manage their security-related policies or their parent SQL servers. As a member of this role you can create and manage SQL databases.

Reference:

https://docs.microsoft.com/en-us/azure/role-based-access-control/built-in-roles

Implement a Secure Environment

Question 9 Topic 4, Case Study 4

You need to recommend a solution to ensure that the customers can create the database objects. The solution must meet the business goals.

What should you include in the recommendation?

- A. For each customer, grant the customer ddl_admin to the existing schema.

- B. For each customer, create an additional schema and grant the customer ddl_admin to the new schema.

- C. For each customer, create an additional schema and grant the customer db_writer to the new schema.

- D. For each customer, grant the customer db_writer to the existing schema.

Answer:

D

Question 10 Topic 4, Case Study 4

You are evaluating the business goals.

Which feature should you use to provide customers with the required level of access based on their service agreement?

- A. dynamic data masking

- B. Conditional Access in Azure

- C. service principals

- D. row-level security (RLS)

Answer:

D

Explanation:

Reference:

https://docs.microsoft.com/en-us/sql/relational-databases/security/row-level-security?view=sql-server-ver15

Question 11 Topic 5, Case Study 5

Based on the PaaS prototype, which Azure SQL Database compute tier should you use?

- A. Business Critical 4-vCore

- B. Hyperscale

- C. General Purpose v-vCore

- D. Serverless

Answer:

A

Explanation:

There are CPU and Data I/O spikes for the PaaS prototype. Business Critical 4-vCore is needed.

Incorrect Answers:

B: Hyperscale is for large databases

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/reserved-capacity-overview

Question 12 Topic 5, Case Study 5

Which audit log destination should you use to meet the monitoring requirements?

- A. Azure Storage

- B. Azure Event Hubs

- C. Azure Log Analytics

Answer:

C

Explanation:

Scenario: Use a single dashboard to review security and audit data for all the PaaS databases.

Dashboards can bring together the operational data that is most important to IT across all your Azure resources, including telemetry from Azure Log Analytics.

Note: Auditing for Azure SQL Database and Azure Synapse Analytics tracks database events and writes them to an audit log in your Azure storage account, Log Analytics workspace, or Event Hubs.

Reference:

https://docs.microsoft.com/en-us/azure/azure-monitor/visualize/tutorial-logs-dashboards

Question 13 Topic 6, Case Study 6

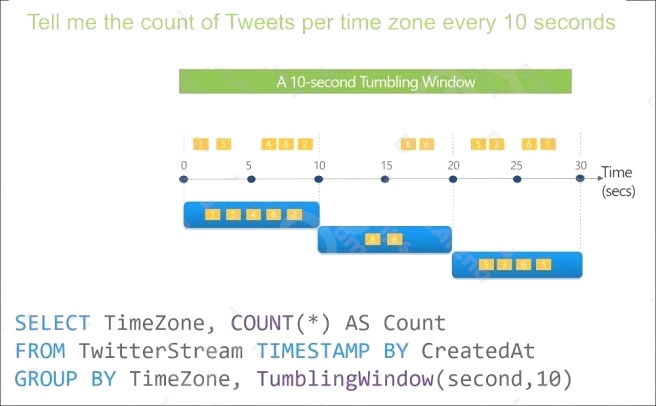

Which windowing function should you use to perform the streaming aggregation of the sales data?

- A. Sliding

- B. Hopping

- C. Session

- D. Tumbling

Answer:

D

Explanation:

Scenario: The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics. The analytics process will perform aggregations that must be done continuously, without gaps, and without overlapping.

Tumbling window functions are used to segment a data stream into distinct time segments and perform a function against them. The key differentiators of a tumbling window are that the windows repeat, do not overlap, and an event cannot belong to more than one tumbling window.
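The windowing behaviour can be sketched in a few lines of Python. This is an illustration of the concept only, not Stream Analytics query syntax: the event tuples are invented, and windows are labelled by their start time with the start inclusive, a simplification whose boundary handling may differ from the service.

```python
from collections import defaultdict


def tumbling_window_sums(events, window_seconds):
    """Sum amounts per fixed-size, non-overlapping window.

    `events` is an iterable of (timestamp_seconds, amount) pairs. Each event
    falls into exactly one window, identified by the window's start time.
    """
    totals = defaultdict(float)
    for timestamp, amount in events:
        # Integer division snaps the timestamp to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        totals[window_start] += amount
    return dict(sorted(totals.items()))


# Invented sample events: (seconds since stream start, sale amount).
sales = [(1, 10.0), (4, 5.0), (10, 2.5), (19, 7.5), (25, 1.0)]
result = tumbling_window_sums(sales, window_seconds=10)
print(result)
```

Because each timestamp maps to a single window start, the windows cover the timeline with no gaps and no overlap, which is the property the scenario asks for. A hopping or sliding window would let one event contribute to several results.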

Reference: https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/stream-analytics/stream-analytics-window-functions.md

Question 14 Topic 6, Case Study 6

Which counter should you monitor for real-time processing to meet the technical requirements?

- A. SU% Utilization

- B. CPU% utilization

- C. Concurrent users

- D. Data Conversion Errors

Answer:

B

Explanation:

Scenario: Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

To monitor the performance of a database in Azure SQL Database and Azure SQL Managed Instance, start by monitoring the CPU and IO resources used by your workload relative to the level of database performance you chose in selecting a particular service tier and performance level.

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/monitor-tune-overview

Question 15 Topic 7, Case Study 7

You need to provide an implementation plan to configure data retention for ResearchDB1. The solution must meet the security and compliance requirements.

What should you include in the plan?

- A. Configure the Deleted databases settings for ResearchSrv01.

- B. Deploy and configure an Azure Backup server.

- C. Configure the Advanced Data Security settings for ResearchDB1.

- D. Configure the Manage Backups settings for ResearchSrv01.

Answer:

D

Explanation:

Reference: https://docs.microsoft.com/en-us/azure/azure-sql/database/long-term-backup-retention-configure